Human-centered AI in a misinformed world

As AI becomes more integrated into how we live, work, and think, it’s time to pause and ask: Are we trusting it too much, too soon? Even Sam Altman, CEO of OpenAI, thinks so.

In this second newsletter of HCXAI - Human-Centered AI - I will discuss the topic of AI hallucinations, something that I’ve been exploring lately. Hope you enjoy it! :-)

AI gaslighting

You don’t need to be a data scientist to grasp how AI works, but most people still don’t. A 2025 global study by KPMG found that nearly 47% of the population has low AI literacy, meaning they don’t fully understand how tools like ChatGPT operate. This includes a lack of awareness about one of AI’s most serious flaws: hallucination (when the system confidently generates information that’s false or misleading).

As AI becomes more embedded in daily life, this knowledge gap becomes a real risk. When people don’t know that AI can make things up, they’re more likely to trust it blindly.

In fact, in the first episode of OpenAI’s official podcast, Sam Altman, CEO of OpenAI, made a striking statement:

“People have a very high degree of trust in ChatGPT, which is interesting, because AI hallucinates. It should be the tech that you don’t trust that much.”

This was a serious nudge to the millions, perhaps billions, of people using tools like ChatGPT for everything from school essays to parenting decisions.

AI is powerful, but it’s not perfect. And if we don’t understand how the tech works, we may end up misusing it in ways that feel subtle but can have real consequences.

If you are interested in diving a bit more into the tech, I recommend reading this article by Prakhar Mehrotra, where he explains how AI works for non-technical audiences - and where the term hallucination comes from.

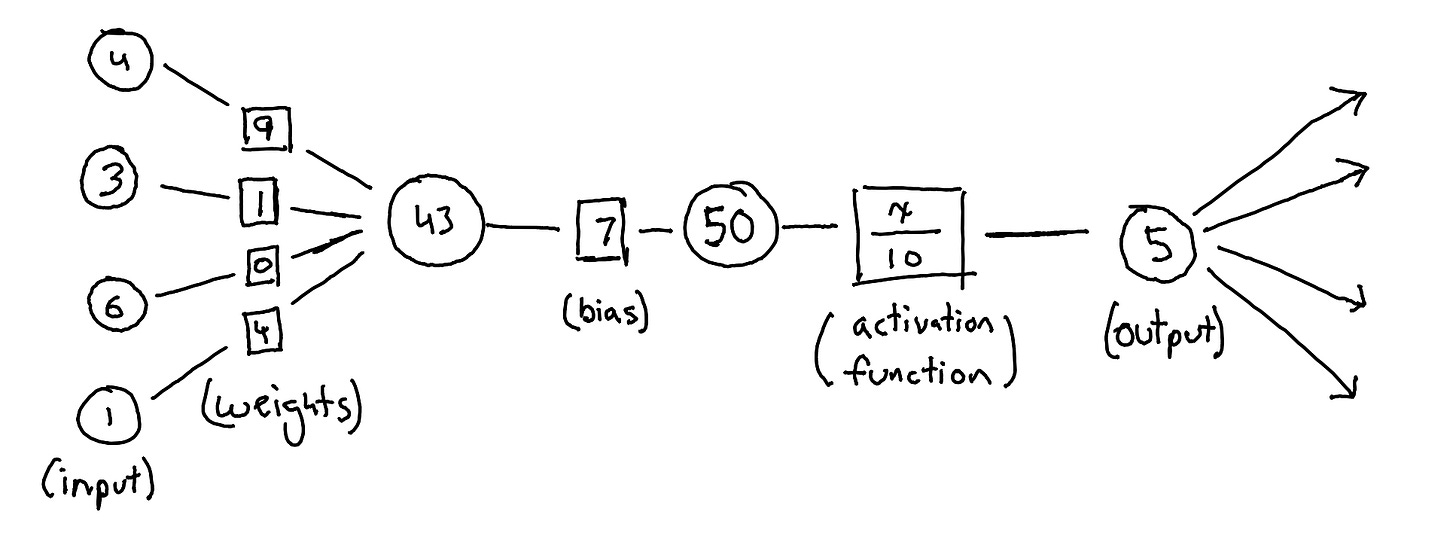

Here’s a sneapeak sketch from his article:

Using AI as our personal sounding board

Increasingly, people are turning to ChatGPT and other chatbots for guidance on personal decisions, emotional support, or even health advice. Why? Because they’re always available, cheap(er), they don’t judge, and they respond with fluency and empathy, in every language possible.

For many, AI has become a kind of therapeutic mirror, a sounding board that offers structure and calm when human support feels too expensive, too slow, or too intimidating.

TikTok is filled with videos of users describing how they rely on ChatGPT as a life coach or even a therapist. Popular influencers and other users describe using it to get help with breakups, career choices, anxiety spirals, and parenting dilemmas.

A 2025 survey by Axios found that more than half of Gen Z users reported feeling comfortable confiding in AI chatbots about emotional issues. In parallel, studies from Stanford and MIT have highlighted how emotionally vulnerable users often perceive AI-generated responses as deeply empathetic, even when they are factually incorrect or ungrounded in any real experience.

Sounding correct vs. Being correct

ChatGPT is incredibly good at sounding correct.

It speaks in clear, confident, coherent sentences that mimic human communication. But that doesn’t mean it knows what it’s talking about. At its foundation, ChatGPT is a large language model trained to predict what word or phrase is most likely to come next, not to find the truth.
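To make "predicting the next word" concrete, here is a minimal sketch of a toy model that only counts which word tends to follow which. It is nothing like ChatGPT in scale or architecture, and the tiny corpus and the function names (`train_bigrams`, `predict_next`, `complete`) are my own assumptions for illustration. The point is only that the most frequent continuation wins, whether or not it is true.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration, not real training data).
# The false claim appears more often than the true one.
CORPUS = (
    "the moon orbits the earth . "
    "the moon is made of rock . "
    "the moon is made of cheese . "
    "the moon is made of cheese ."
)


def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def complete(counts, prompt, max_words=5):
    """Extend the prompt one word at a time with the likeliest next word."""
    words = prompt.split()
    for _ in range(max_words):
        next_word = predict_next(counts, words[-1])
        if next_word is None or next_word == ".":
            break
        words.append(next_word)
    return " ".join(words)


model = train_bigrams(CORPUS)
print(complete(model, "the moon is made of"))
```

The toy model finishes the sentence with "cheese" simply because that pattern is more common in its data. It never checks whether the statement is true.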

The technical term for when AI generates false information is hallucination, and it’s a well-known issue in the AI community. ChatGPT doesn’t lie with intent; it hallucinates because it lacks understanding. It doesn’t know truth from fiction; it only recognizes patterns. That’s why it can sometimes generate completely fabricated facts, citations, or medical advice with the tone and confidence of an expert.

Blind trust in that kind of output becomes especially dangerous when AI steps into domains that require empathy, nuance, or lived experience.

What is happening behind the scenes?

Even when ChatGPT has access to the internet, that doesn’t mean you can trust it to always deliver accurate information. Real-time browsing can surface recent content, but the model still can’t critically evaluate sources or verify facts.

Unfortunately, the content it draws on often includes misleading, outdated, or low-quality material. Without proper safeguards, ChatGPT can confidently repeat misinformation sourced from unreliable sites, making it sound polished and convincing even when it isn’t. As one Time analysis put it, AI tools “often can’t nail down verifiable facts” and “present inaccurate answers with alarming confidence”.

In fact, most people don’t verify what they’re told. A 2024 survey by Inc. found that 92% of users don’t fact-check AI-generated answers, especially when the responses seem confident and well-written. That means false information is absorbed and acted on.

Even state-of-the-art models like GPT‑4 still produce hallucinations, with error rates around 1.8%, and newer “reasoning” models sometimes hallucinate even more.

According to OpenAI’s internal tests, as reported by TechCrunch, o3 and o4-mini, which are so-called reasoning models, hallucinate more often than the company’s previous reasoning models (o1, o1-mini, and o3-mini) as well as OpenAI’s traditional, “non-reasoning” models, such as GPT-4o.
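A 1.8% error rate can sound negligible, so here is a quick back-of-the-envelope sketch of how it adds up over many answers. It rests on a strong simplifying assumption of my own: that every answer has the same, independent 1.8% chance of containing a hallucination. Real error rates vary a lot by topic and model, and the function name is hypothetical.

```python
def prob_at_least_one_error(error_rate, n_answers):
    """Chance that at least one of n independent answers contains an error."""
    return 1 - (1 - error_rate) ** n_answers


# Assumed per-answer error rate, taken from the figure quoted above.
ERROR_RATE = 0.018

for n in (1, 10, 50, 100):
    print(f"{n:>3} answers: {prob_at_least_one_error(ERROR_RATE, n):.1%}")
```

Under these assumptions, the chance of having seen at least one hallucinated answer passes one half at around 40 answers, which is why never fact-checking matters even when each single answer is usually right.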

RAG-based solutions

Thankfully, not all AI systems suffer from hallucination in the same way. A new generation of tools and companies is tackling this problem using RAG: Retrieval-Augmented Generation.

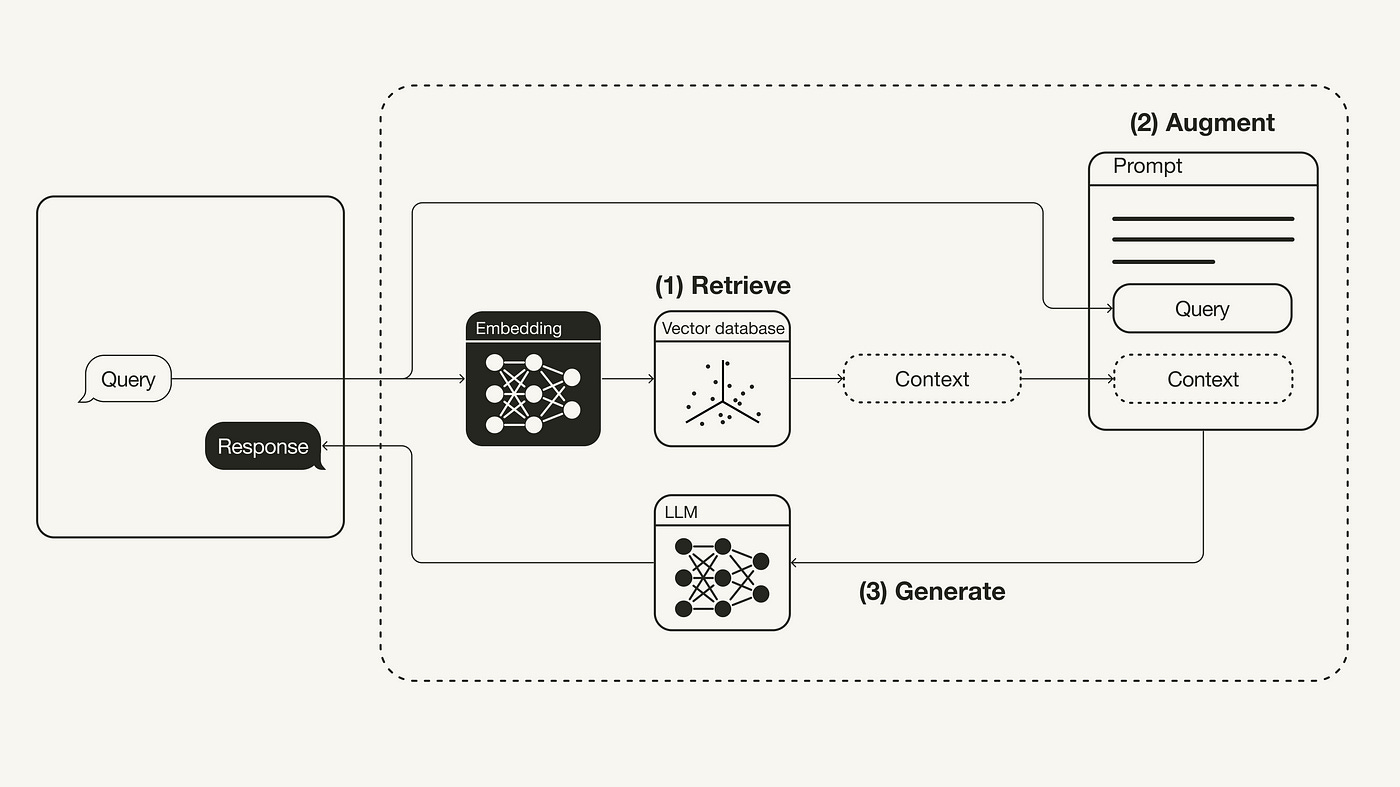

When a user submits a query, the system first converts it into a vector using an embedding model and retrieves relevant documents from a vector database (Retrieve).

This retrieved information, or context, is then combined with the original query to form an augmented prompt (Augment).

The augmented prompt is passed to a large language model, which generates a response based on both the query and the retrieved context (Generate).

This process grounds the model’s output in factual, relevant data, reducing hallucinations and improving reliability.

In short, RAG combines the power of language models with external databases. Instead of generating answers from memory, these systems first retrieve relevant documents or facts from a trusted source, and then generate a response grounded only in that information.
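The Retrieve, Augment, and Generate steps can be sketched in a few lines of code. This is a toy under stated assumptions, not how any of the products below work: the "embedding" is a plain word count instead of a learned embedding model, the "vector database" is a Python list, the sample documents are invented, and `generate` is a placeholder where a real system would call a large language model.

```python
import math
import re
from collections import Counter

# Stand-in for a trusted knowledge base (invented sample documents).
DOCUMENTS = [
    "To reset your password, open Settings and choose Reset Password.",
    "Invoices are emailed on the first day of each month.",
    "Support is available by chat from 9am to 5pm on weekdays.",
]


def embed(text):
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Retrieve: rank documents by similarity to the query, keep the best."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:top_k]


def augment(query, context_docs):
    """Augment: combine the retrieved context with the original question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


def generate(prompt):
    """Generate: hypothetical stub standing in for a call to an LLM."""
    return f"[LLM response to a {len(prompt)}-character prompt]"


query = "How do I reset my password?"
prompt = augment(query, retrieve(query, DOCUMENTS))
print(prompt)
print(generate(prompt))
```

The important design choice is in `augment`: the model is told to answer only from the retrieved context and to admit when the answer is missing, which is what keeps it from improvising.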

Some of the most successful RAG-based companies and tools include:

Kapa.ai – Used by tech companies to power support bots that give accurate, documentation-based answers. Perfect for developer or customer questions.

Glean – Helps companies search across internal documents (e.g., Slack, Notion, Confluence) and answer employee questions reliably.

Danswer – An open-source enterprise search platform that connects to internal tools and uses RAG to provide source-backed answers.

Humata.ai – Lets users upload documents and get answers pulled directly from the file contents, with nothing made up.

What unites all these platforms is a commitment to ground truth. Rather than letting the AI wing it, they constrain it to trusted material, a critical shift in building trustworthy AI experiences.

Human-centered AI means training people on critical thinking and on how the tech works.

At the heart of all this is a bigger question: how do we, as users, developers, and policymakers, ensure that AI earns our trust responsibly?

As Sam Altman said on the podcast, “we need societal guardrails… If we’re not careful, trust will outpace reliability.”

That means better education about how AI works. It means clear transparency from companies about what a tool can and can’t do. And most of all, it means developing AI systems that are accountable.

We must learn to treat AI as a powerful tool, not as the source of all truth. And like any tool that interacts with people, it must be shaped by ethics, not just engineering.

The best way to use AI is not to trust it blindly. So go ahead: ask ChatGPT for ideas, summaries, drafts, and suggestions. But when it comes to your health, your finances, your legal rights, or your emotional well-being, remember who’s ultimately in charge.

If we want to build technology that is human-centered, we need to stay aware of how it shapes our thoughts, choices, and relationships. That begins with understanding what is happening now and what kind of future we want to create.