Welcome to HCXAI: My first newsletter exploring the topic of human-centered AI.

Why this newsletter, and why now? What does Jony Ive have to do with AI's next chapter? Are we finally shifting from "what AI can do" to what people need AI to do?

Dear readers,

Welcome to the first newsletter of my new baby project, where I’m excited to explore the topic of HCXAI (Human-Centered AI). I promise you that this is not another newsletter about what AI can do; it’s a newsletter about what humanity needs AI to do.

If you’re a designer, developer, founder, or simply curious about the role AI will play in our lives, and in humanity more broadly, you’re in the right place. :)

With this newsletter, I hope to offer new insights and reflections on the times we’re living in, and help you think more intentionally about the relationship you want to have with technology.

What role should AI play in your work, your personal life, and your sense of self - both now and in the future?

I believe we’re not far from a world where AI is embedded into everything, not just our phones (if we’ll still call them that - see picture below), but possibly even into our bodies.

That’s why I want to start reflecting on these questions now. This newsletter is about anticipating what’s to come, and reflecting on what we want AI to do, and just as importantly, what we don’t.

Along the way, we’ll explore ideas from philosophers, speculative futurists, and critical thinkers who are helping shape that conversation.

So let’s start with the question: why HCXAI, and why now?

AI is evolving at breakneck speed. Each week we read about new tools and new applications of the technology that can write essays, generate realistic images, and simulate friends, therapists, you name it.

I personally find these advances impressive, but also, sometimes, overwhelming.

Overwhelming because I believe that, most of the time, we are not asking the right questions. We think “technology first”: what can this technology do? We should instead be asking: what do people need? What can we solve for them with this technology? And what can we ask AI to do for us that really matters and has a positive impact on our lives, our communities, our development, and our humanity in general?

My goal with HCXAI is to shift the conversation from AI’s technical capabilities to its meaningful integration into our lives. How do we align AI technology with our values, communities, and vision for the future?

Why do I care? My personal motivation.

As those who know me are aware, I’ve spent about ten years in the design and innovation industry, from studying design engineering at university to later specializing in interaction design for my master’s.

Since I was very young, I’ve been fascinated by the intersection of humanity and technology. It all started with a childhood dream of becoming a spy (blaming the movie Spy Kids, of course!), obsessed with gadgets, walkie-talkies, and anything that felt like a secret tool.

I’d play for hours, imagining how technology could unlock new ways of seeing and doing. That curiosity grew with time: from building things with a 3D printer during engineering school, to experimenting with augmented and virtual reality during my internship, and now to diving into the world of AI.

But it’s never just been about the tools themselves. What truly matters for me is what happens when these technologies land in human hands, how they change the way we live, work, create, and connect. That’s the intersection I care most about.

A new inflection point in human history

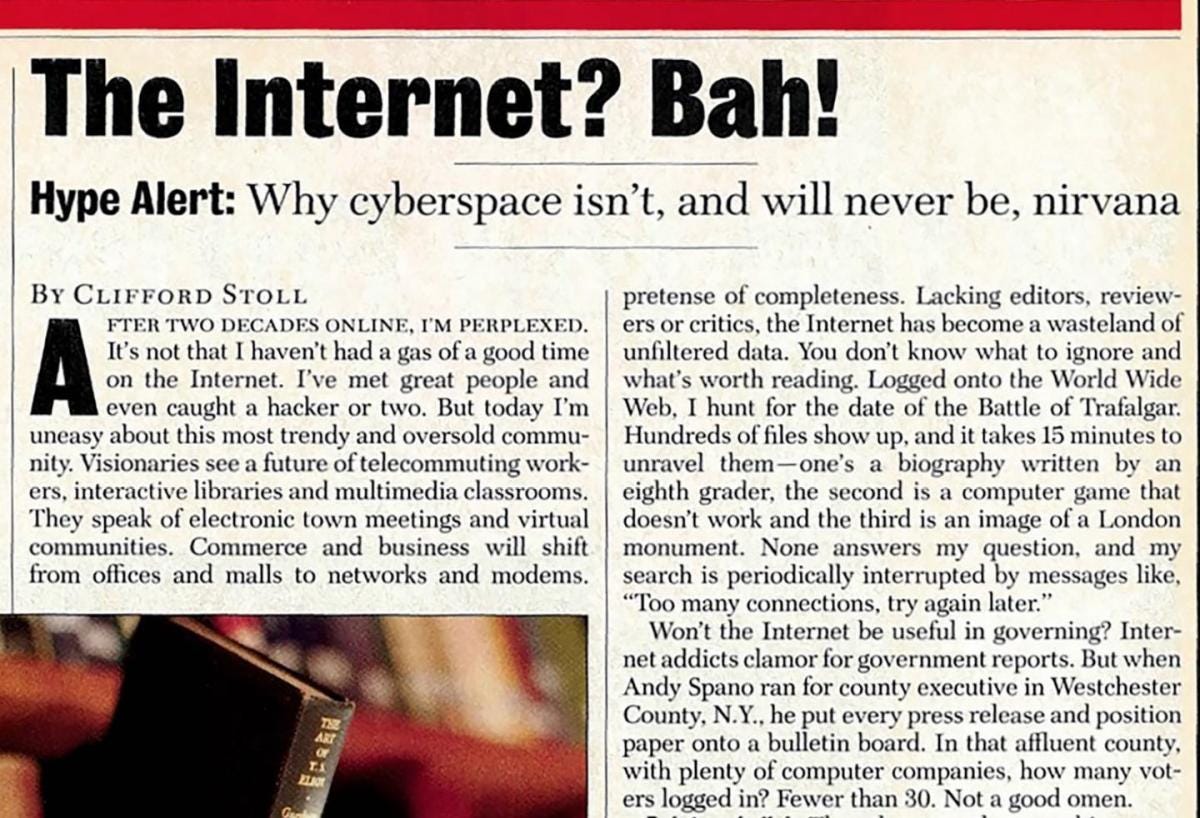

Born in 1995, I didn’t experience the moment the internet first appeared, but I’ve heard endless stories from my parents about that “before and after” moment, which transformed how we live, communicate, and work. At the time, some people doubted it would change much (see image below). Yet, in hindsight, it changed everything.

It was a moment full of both promise and consequence. The internet gave us instant access to information, new ways to connect, and powerful tools for creativity.

So why now?

I believe we’re standing at a new inflection point in human history, one shaped by the advancement of artificial intelligence.

The ways we think, communicate, and create are evolving faster than ever. And this is just the beginning. The pace of change is accelerating exponentially, reaching into parts of our lives we haven’t even fully imagined yet.

We're already seeing early signs of AI becoming deeply embedded in the human experience, not just in our devices, but potentially in our bodies and minds.

As some of you know, I have been considering starting this newsletter for a while, but six days ago some big news pushed me to go all in or, as we Spaniards say, to tirarme de cabeza a la piscina (dive headfirst into the pool). So here we are. And here’s why.

Jony Ive, OpenAI, and a $6.5 Billion Signal

Six days ago, OpenAI confirmed a $6.5 billion deal to acquire io, the AI-hardware spin-out founded by Jony Ive, and to put Ive’s studio LoveFrom in charge of design across all OpenAI products.

So why is this important?

“AI is an incredible technology, but great tools require work at the intersection of technology, design, and understanding people and the world.”

“No one can do this like Jony and his team.” (Sam Altman, May 2025)

For much of the past decade, progress in AI was measured by scale: larger datasets, more parameters, faster training. This partnership signals a shift toward experience-led innovation, echoing the moment when the iPhone reframed the way we communicate and interact with technology every day.

According to The Verge, Altman and Ive are working on a pocket-sized, screen-free, context-aware companion that is expected to be ready in 2026. Altman has said the first device “is a totally new kind of thing,” not a phone replacement but an ambient tool that blends into daily life.

“I am absolutely certain that we are literally on the brink of a new generation of technology that can make us our better selves.” (Ive, May 2025)

Google CEO Sundar Pichai called Ive “one of a kind,” noting that the tie-up “could be as transformative as the birth of the internet.”

According to Business Insider, venture analysts already compare the move to Apple’s 2007 iPhone reveal, arguing it may redefine default AI form factors much as multitouch screens once did.

Instead of asking what AI can do, OpenAI is asking how AI should feel for people, a question at the heart of human-centered design. Intelligence alone isn’t enough; the future belongs to AI that looks, feels, and behaves in ways people intuitively trust and enjoy.

Understanding the term Human-Centered AI (HCXAI)

Human-centered AI focuses on designing technology with people at the forefront of the process. Although it is not yet a widely discussed topic, many academics, philosophers, and companies are already championing this approach to AI. Some examples are:

Stanford Institute for Human-Centered AI (HAI): Facilitates collaboration among researchers, policymakers, and designers to ensure AI enhances human well-being.

Oxford Human-Centered AI Lab: Combines philosophy and technology to make AI systems ethical, transparent, and understandable.

Google’s PAIR (People + AI Research): Specializes in making AI accessible and inclusive through user-friendly tools and co-creation processes.

These initiatives are highlighting the ongoing gap in how we understand and engage with AI. Across academia and industry, stakeholders from diverse backgrounds are beginning to ask deeper, more critical questions, not just about what AI can do, but what it should do.

At the heart of these conversations is a growing recognition that ethics, human impact, and societal values must move from the sidelines to the forefront of AI development.

Each edition of HCXAI will explore a different aspect of this movement, from innovative startups using AI in a human way to insightful research papers and interviews with philosophers and designers actively shaping AI's responsible evolution.

Why should we care about humanity + AI?

As artificial intelligence becomes increasingly powerful, it raises urgent questions.

What does it mean to be human in an AI-driven world? What sets us apart, and what truly matters? While AI can deliver speed, efficiency, and precision, there are distinctly human experiences, like empathy, creativity, and resilience, that technology cannot replicate (or at least, not yet).

On The Diary of a CEO, Simon Sinek talks about the importance of doing hard things in order to grow. He warns that relying on AI to shortcut our way to outcomes is dangerous, because “the journey matters more than the destination.” True growth comes from repetition, friction, and failure, from the uncomfortable but essential process of figuring things out the hard way. AI might give us answers instantly, but it skips the struggle, and with it, the learning, emotional depth, and sense of purpose that come from the process itself.

It’s like saying AI will provide boats for everyone, except when a storm hits, and by then you don’t know how to swim.

This newsletter will also explore how our increasing dependence on AI might be subtly reshaping us as individuals and as a culture.

If we use AI for everything, what skills, sensitivities, or instincts might we be losing?

What habits are we building without even noticing? These are the kinds of questions we believe are worth asking, because the way we use AI shouldn’t be seen only as a tech decision, but as a human one.

Looking ahead

I hope you enjoyed the first edition of HCXAI. If you have any feedback, please feel free to email me. I would love to hear how you feel about the topics I’m writing about.

Thank you for reading the first newsletter :)

—Alejandra Godo, Founder & CEO, Blas&Co.

HCXAI is powered by Blas&Co, a venture studio that builds products & services that bring the world forward. Through this newsletter, we aim to create domain expertise and advance dialogue around human-centered AI, arguably one of the defining topics of our time.

![Spy Kids DVD cover](https://substackcdn.com/image/fetch/$s_!spPy!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fa4dd1c71-7651-43b5-b8a7-c8b0452fc97c_354x500.jpeg)

I love that you're asking the right question - what does humanity need AI to do. Too often innovations are led in a product/tech push kind of way. High time we focus on the human side, as well as the more philosophical angles. Following this with great interest!!

This is a really important issue: my big fear (or doubt) is how society will develop AI while keeping the tool free from the influence of entities interested in extracting the greatest economic benefit. We have all seen, for example, how Meta has, little by little, gained space in our lives and how, knowing this, we have allowed it.

I would say that the big question is: How can we develop this tool and offer its benefits universally and free of charge, so that everyone can benefit from its help without paying the toll of manipulation for economic or political reasons?